Evaluation Results

We evaluated the three subtasks separately.

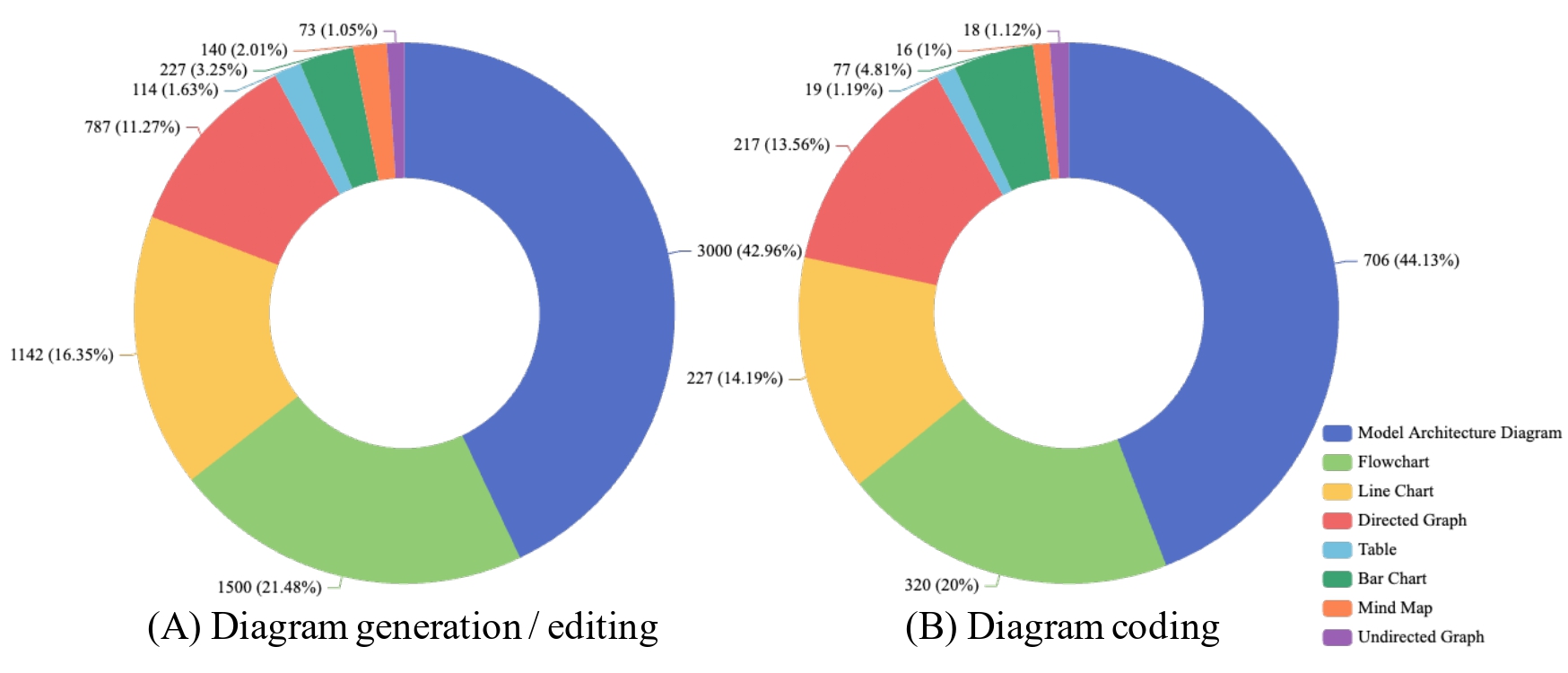

- Diagram Generation

- Diagram Coding

- Diagram Editing

Evaluation on Diagram Generation

DiagramAgent's Code Agent achieves the top score on all reported code accuracy and image fidelity metrics in the diagram generation task. For code quality, it leads on Pass@1 (58.15), ROUGE-L (51.97), and CodeBLEU (86.83), among others, highlighting its capability to generate accurate and robust code representations. For image quality, it also leads on PSNR (6.38) and LPIPS (45.95), among others, confirming its ability to maintain high visual fidelity in generated diagrams. Together, these results demonstrate the effectiveness of DiagramAgent in generating structured, accurate, and high-quality diagrams.

| Model | Size | Pass@1↑ | ROUGE-L↑ | CodeBLEU↑ | Edit Dist.↓ | chrF↑ | RUBY↑ | CLIP-FID↓ | LPIPS↓ | PSNR↑ | MS-SSIM↑ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Qwen2.5-Coder | 7B | 32.22 | 41.94 | 82.58 | 83.45 | 38.14 | 28.09 | 18.90 | 60.54 | 3.72 | 13.04 |
| DeepSeek-Coder | 33B | 55.56 | 44.26 | 83.29 | 81.85 | 42.01 | 30.55 | 15.49 | 60.99 | 6.02 | 19.80 |
| Code-Llama | 34B | 8.89 | 22.92 | 76.78 | 95.89 | 28.77 | 13.60 | 30.12 | 59.80 | 0.89 | 2.32 |
| WizardCoder | 15B | 28.89 | 29.93 | 78.96 | 91.30 | 31.38 | 19.73 | 27.38 | 55.96 | 3.36 | 11.66 |
| Codegeex4-all | 9B | 49.63 | 42.14 | 82.94 | 86.31 | 41.36 | 28.69 | 13.86 | 61.08 | 5.48 | 17.37 |
| Starcoder2 | 15B | 27.41 | 26.49 | 78.56 | 90.67 | 25.98 | 17.74 | 31.63 | 56.54 | 3.11 | 10.53 |
| Yi-Coder | 9B | 37.04 | 41.38 | 82.46 | 83.91 | 39.20 | 28.00 | 22.40 | 57.10 | 3.91 | 14.11 |
| Llama-3.1 | 8B | 33.58 | 37.04 | 80.45 | 88.24 | 36.80 | 24.79 | 17.91 | 58.80 | 3.78 | 11.94 |
| Baichuan2 | 13B | 16.30 | 33.28 | 79.94 | 87.96 | 31.83 | 21.51 | 23.43 | 61.49 | 1.81 | 4.94 |
| Internlm2_5 | 20B | 34.44 | 39.45 | 81.79 | 87.00 | 38.44 | 26.21 | 24.56 | 56.81 | 3.91 | 13.39 |
| Yi-1.5 | 34B | 35.19 | 42.56 | 82.91 | 85.52 | 42.03 | 28.43 | 20.03 | 58.04 | 3.82 | 12.83 |
| Qwen2 | 7B | 41.48 | 41.74 | 82.49 | 84.86 | 39.72 | 27.93 | 15.57 | 58.89 | 4.60 | 15.48 |
| GPT-4o | - | 49.81 | 44.59 | 82.83 | 85.17 | 43.83 | 30.08 | 13.26 | 63.07 | 5.56 | 18.21 |
| DeepSeek V2.5 | - | 54.44 | 43.00 | 82.83 | 85.67 | 43.63 | 28.75 | 13.32 | 62.32 | 5.56 | 16.98 |
| GLM-4-plus | - | 42.96 | 46.42 | 83.91 | 82.40 | 44.51 | 32.13 | 14.70 | 63.38 | 4.47 | 13.89 |
| Gemini | - | 43.23 | 44.86 | 82.37 | 84.44 | 43.75 | 30.46 | 21.69 | 54.93 | 3.16 | 18.70 |
| DiagramAgent | 7B | **58.15** | **51.97** | **86.83** | **74.62** | **53.49** | **39.71** | **11.16** | **45.95** | **6.38** | **24.78** |

Main results for diagram generation (Code Agent). The best result in each metric is bolded.
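Two of the code metrics in the table can be illustrated with a small sketch. The Python below assumes that Pass@1 is the percentage of samples whose single generated program compiles, and that Edit Dist. is the Levenshtein distance normalized by the longer string and scaled to 0-100. This section does not give the exact definitions, so both are assumptions, and the function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def normalized_edit_distance(pred: str, ref: str) -> float:
    """Edit distance as a percentage of the longer string (assumed normalization)."""
    longest = max(len(pred), len(ref))
    if longest == 0:
        return 0.0
    return 100.0 * levenshtein(pred, ref) / longest


def pass_at_1(compiled: list[bool]) -> float:
    """Percentage of samples whose single generated program compiled (assumed definition)."""
    if not compiled:
        return 0.0
    return 100.0 * sum(compiled) / len(compiled)
```

Under these assumptions, a lower edit distance means the generated code is closer to the reference, which matches the ↓ marker in the table header, while Pass@1 rewards any output that compiles regardless of how closely it matches the reference.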

Evaluation on Diagram Coding

DiagramAgent's model, configured with compiler-based debugging followed by GPT-4o verification, achieves the best result on all six reported metrics in the diagram coding task. Key metrics include Pass@1 (68.89), ROUGE-L (48.99), and CodeBLEU (84.64), demonstrating DiagramAgent's effectiveness in generating high-quality code from images. DiagramAgent outperforms both open-source models such as Qwen2-VL-7B-Instruct and closed-source models such as GPT-4o, highlighting its robustness in tasks requiring precise visual-to-code translation.

Main results for diagram coding task (Diagram-to-Code Agent). The best result in each metric is bolded.

| Model | Size | Pass@1↑ | ROUGE-L↑ | CodeBLEU↑ | Edit Dist.↓ | chrF↑ | RUBY↑ |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Yi-VL | 34B | 2.22 | 20.01 | 70.57 | 95.43 | 11.68 | 12.53 |
| Qwen2-VL | 8B | 28.89 | 31.74 | 80.04 | 88.13 | 28.39 | 21.21 |
| Internlm-xcomposer2.5 | 7B | 3.33 | 28.47 | 77.35 | 92.35 | 18.74 | 17.97 |
| Llama-3.2-Vision | 11B | 27.78 | 21.94 | 75.37 | 92.92 | 16.37 | 13.95 |
| Phi-3.5-vision | 4B | 24.07 | 27.53 | 76.56 | 90.01 | 20.86 | 17.96 |
| Llava-v1.6 | 34B | 8.89 | 26.68 | 76.53 | 93.46 | 21.00 | 16.30 |
| Cogvlm2-llama3 | 19B | 3.70 | 14.42 | 70.72 | 97.07 | 8.27 | 8.91 |
| Deepseek-vl | 7B | 50.74 | 25.18 | 76.48 | 88.82 | 18.35 | 16.13 |
| GPT-4o | - | 64.07 | 39.95 | 81.78 | 86.68 | 34.40 | 26.18 |
| GLM-4-plus | - | 51.48 | 35.92 | 80.16 | 86.12 | 29.10 | 24.60 |
| Gemini-1.5-pro | - | 17.78 | 38.66 | 80.75 | 88.05 | 30.00 | 25.62 |
| DiagramAgent | 7B | **68.89** | **48.99** | **84.64** | **72.74** | **46.98** | **37.46** |

Evaluation on Diagram Editing

DiagramAgent's Code Agent achieves the strongest results on the diagram editing task in both code accuracy and image quality metrics. For code generation, it leads with Pass@1 (98.00), ROUGE-L (98.41), and CodeBLEU (99.93), underscoring its capability for precise and reliable code outputs. For image quality, it also achieves the best CLIP-FID (1.08), LPIPS (40.64), and MS-SSIM (97.00). The exception is PSNR, where its score (13.18) is lower than WizardCoder's (24.24), potentially due to DiagramAgent's focus on overall image fidelity rather than absolute sharpness. On all other metrics, DiagramAgent outperforms the baseline models, validating its strong adaptability and reliability.

Main results for diagram editing (Code Agent). The best result in each metric is bolded.

| Model | Size | Pass@1↑ | ROUGE-L↑ | CodeBLEU↑ | Edit Dist.↓ | chrF↑ | RUBY↑ | CLIP-FID↓ | LPIPS↓ | PSNR↑ | MS-SSIM↑ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Qwen2.5-Coder-7B | 7B | 71.50 | 91.86 | 97.42 | 13.26 | 89.91 | 86.99 | 4.79 | 46.45 | 11.16 | 66.76 |
| DeepSeek-Coder-Instruct | 33B | 90.50 | 96.64 | 98.48 | 5.80 | 95.73 | 94.68 | 2.63 | 46.25 | 15.84 | 86.42 |
| Code-Llama | 34B | 87.00 | 52.51 | 92.55 | 50.96 | 65.83 | 40.22 | 4.95 | 44.62 | 24.10 | 82.42 |
| WizardCoder | 15B | 87.50 | 74.59 | 95.20 | 28.91 | 84.23 | 63.71 | 4.92 | 44.18 | **24.24** | 82.88 |
| Codegeex4-all | 9B | 90.00 | 96.73 | 98.71 | 5.39 | 95.99 | 95.69 | 1.93 | 43.43 | 11.47 | 92.35 |
| Starcoder2 | 15B | 41.00 | 21.34 | 90.28 | 80.79 | 34.04 | 14.36 | 9.44 | 44.76 | 11.50 | 37.93 |
| Yi-Coder | 9B | 81.50 | 96.03 | 98.08 | 7.00 | 95.43 | 93.41 | 2.68 | 45.28 | 13.05 | 78.59 |
| Llama-3.1-8B-Instruct | 8B | 24.00 | 50.85 | 89.20 | 55.86 | 57.03 | 44.52 | 14.06 | 48.59 | 4.09 | 21.37 |
| Baichuan2-13B-Chat | 13B | 39.50 | 82.07 | 92.51 | 30.60 | 82.33 | 75.16 | 10.04 | 44.80 | 6.50 | 37.06 |
| Internlm2_5-20b-chat | 20B | 57.00 | 84.31 | 95.57 | 21.13 | 87.98 | 77.90 | 6.58 | 43.85 | 12.14 | 55.96 |
| Yi-1.5-34B-chat | 34B | 90.50 | 96.64 | 98.38 | 6.85 | 95.78 | 94.52 | 2.13 | 45.86 | 16.06 | 85.70 |
| Qwen2-7B-Instruct | 7B | 81.50 | 91.51 | 96.40 | 17.87 | 91.34 | 87.63 | 3.72 | 44.87 | 15.59 | 76.67 |
| GPT-4o | - | 92.42 | 96.22 | 97.73 | 7.31 | 95.49 | 94.50 | 1.89 | 43.53 | 14.23 | 88.43 |
| DeepSeek V2.5 | - | 95.00 | 96.77 | 98.83 | 5.04 | 96.10 | 94.96 | 1.63 | 43.16 | 12.81 | 91.97 |
| GLM-4-plus | - | 92.00 | 97.05 | 98.63 | 6.06 | 96.04 | 95.12 | 1.54 | 45.79 | 13.89 | 88.31 |
| Gemini | - | 72.00 | 95.09 | 95.34 | 7.00 | 93.32 | 93.45 | 2.08 | 47.57 | 12.50 | 85.59 |
| DiagramAgent | 7B | **98.00** | **98.41** | **99.93** | **3.58** | **97.96** | **97.05** | **1.08** | **40.64** | 13.18 | **97.00** |
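Which model is "best" on a metric depends on the arrow in the column header: ↑ means higher is better, ↓ means lower is better. The Python sketch below shows one way to pick the leader per metric with that direction taken into account. It uses three rows copied from the diagram editing table as a subset for brevity; the function name `best_per_metric` is hypothetical and not part of DiagramAgent.

```python
# Direction of each metric as marked in the table header: True means higher is better.
HIGHER_IS_BETTER = {
    "Pass@1": True, "ROUGE-L": True, "CodeBLEU": True, "Edit Dist.": False,
    "chrF": True, "RUBY": True, "CLIP-FID": False, "LPIPS": False,
    "PSNR": True, "MS-SSIM": True,
}
METRICS = list(HIGHER_IS_BETTER)

# Three rows copied from the diagram editing table (a subset, for illustration).
ROWS = {
    "WizardCoder":   [87.50, 74.59, 95.20, 28.91, 84.23, 63.71, 4.92, 44.18, 24.24, 82.88],
    "DeepSeek V2.5": [95.00, 96.77, 98.83, 5.04, 96.10, 94.96, 1.63, 43.16, 12.81, 91.97],
    "DiagramAgent":  [98.00, 98.41, 99.93, 3.58, 97.96, 97.05, 1.08, 40.64, 13.18, 97.00],
}


def best_per_metric(rows: dict[str, list[float]]) -> dict[str, str]:
    """Return the leading model for each metric, respecting its direction."""
    best = {}
    for idx, metric in enumerate(METRICS):
        pick = max if HIGHER_IS_BETTER[metric] else min
        best[metric] = pick(rows, key=lambda model: rows[model][idx])
    return best


if __name__ == "__main__":
    for metric, model in best_per_metric(ROWS).items():
        print(f"{metric}: {model}")
```

On these three rows, the sketch reports WizardCoder for PSNR and DiagramAgent for every other metric, which matches the exception noted in the text.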